Nvidia continues to set the bar higher. In his CES keynote on Monday, the chip giant’s CEO Jensen Huang announced the launch of the Vera Rubin supercomputing platform, capable of delivering five times the compute power of the last-generation chip and arriving months ahead of schedule.

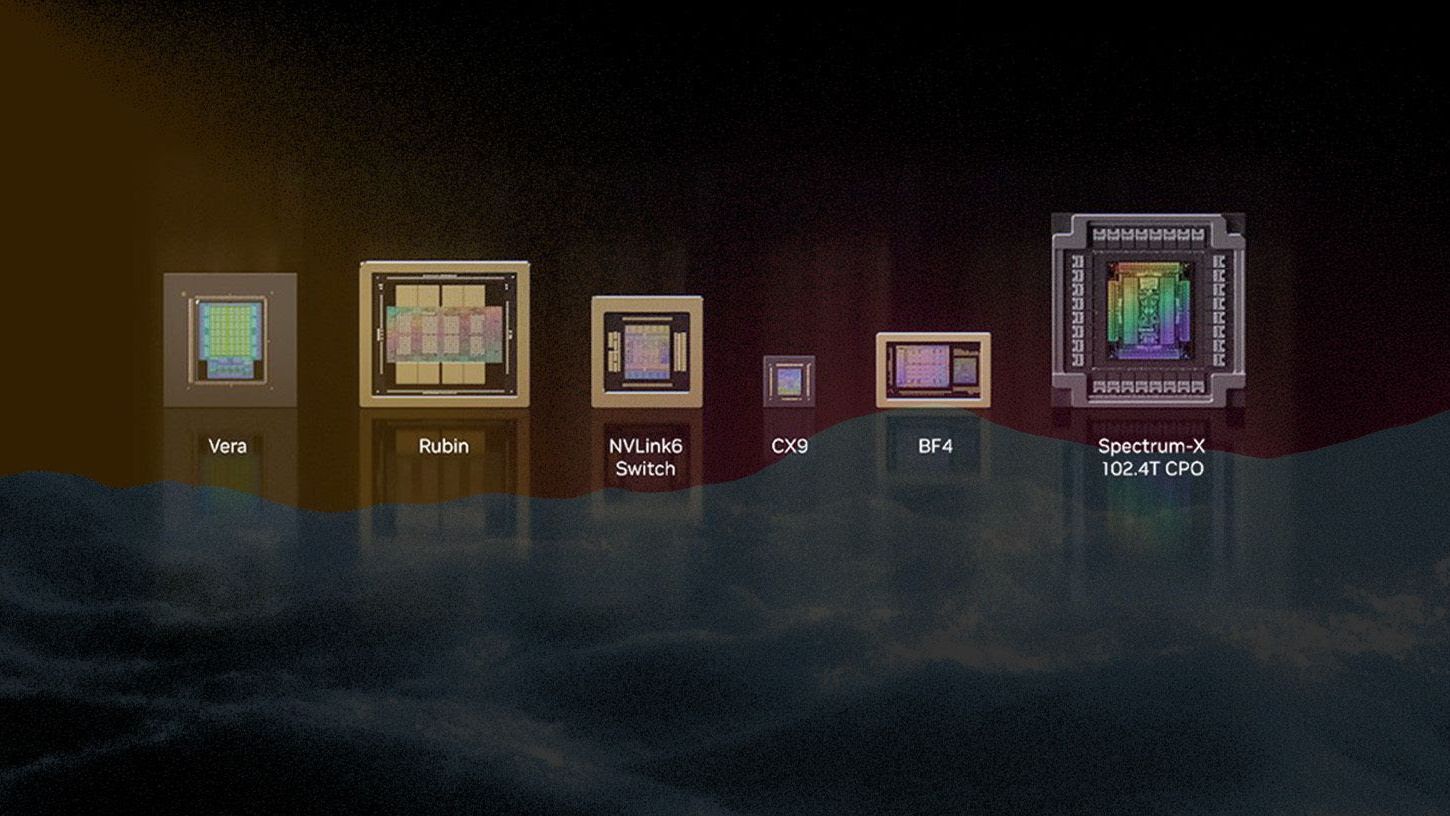

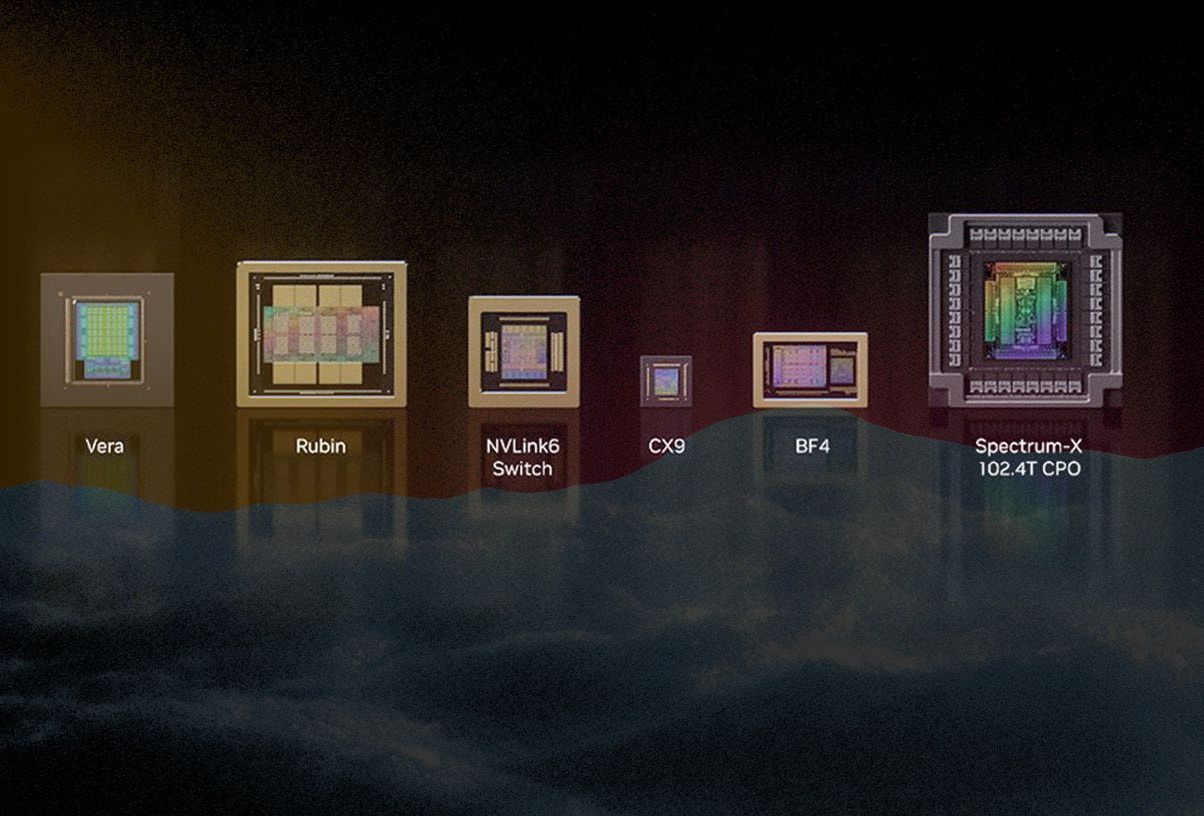

Huang said that the Vera Rubin platform is now in “full production” and will be available to customers in the second half of 2026. Vera Rubin is built from six chip types that work in concert to reduce training time and inference costs, with 1,152 GPUs in total across 16 server racks.

- The platform is particularly well suited to agentic AI, advanced reasoning and massive-scale mixture-of-experts (MoE), a machine learning approach that draws on multiple specialized models.

- Huang touted the Rubin platform’s ability to do more with less: it cuts inference token costs by up to 10 times and requires a quarter as many GPUs to train mixture-of-experts models compared to Nvidia’s current Blackwell platform.
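
For readers unfamiliar with mixture-of-experts, the idea of drawing on multiple specialized models can be sketched in a few lines of Python. This is a toy illustration only, not Nvidia's implementation or any particular model's architecture: the expert functions, the router scores, and the names `route_top_k` and `moe_forward` are all made up for the example. It assumes the common "top-k" style of routing, where a router scores every expert for a given input and only the highest-scoring few are actually run.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Hypothetical toy "experts": each is just a simple function of the input.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
gate_scores = [0.1, 2.0, 2.0, -1.0]  # made-up router scores for one input

selected = route_top_k(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)
print(selected, output)
```

Only two of the four experts run for this input, which is the property that makes the approach attractive at scale: the total model can grow without every part of it being computed for every token.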

“The amount of computation necessary for AI is skyrocketing,” Huang said in his keynote. “The demand for NVIDIA GPUs is skyrocketing. It's skyrocketing because models are increasing by a factor of 10, an order of magnitude every single year.”

Nvidia has been talking about Vera Rubin for a while now. The company first teased the platform at the Computex conference in 2024, and laid out a clearer roadmap for the tech at its GTC conference in March 2025. However, the platform wasn’t expected to be released until mid-2026, making Huang’s announcement on Monday six months ahead of schedule.