Can ChatGPT age checks keep kids safe?

January 21, 2026

Welcome back. I'm excited to introduce a new member of The Deep View team, Senior Reporter Sabrina Ortiz. Sabrina has been covering the AI revolution with incredible energy and insight over the past three years. At The Deep View, she will continue to cover AI's biggest questions, most important developments, and industry-shaking events. She will be writing news, posting on social media, hosting podcasts, moderating panels, and much more. If you're not already following Sabrina on LinkedIn, X, and Instagram, then this is a great moment to go and do it! —Jason Hiner

IN TODAY’S NEWSLETTER

1. Can ChatGPT age checks keep kids safe?

2. X breaks precedent by open-sourcing its algorithm

3. Microsoft makes a bid to run physical AI

POLICY

Can ChatGPT age checks keep kids safe?

At long last, OpenAI is rolling out its way to judge whether or not you are smarter than a fifth grader.

On Tuesday, the company announced the launch of its age prediction systems on ChatGPT consumer plans. This tech will help determine whether an account belongs to someone under 18, enabling proper safeguards for those users. The tech will be rolled out in the EU in the coming weeks to account for regional laws.

Teens who identify themselves as underage will automatically have safeguards in place. For everyone else, OpenAI’s model estimates age from a combination of behavioral and account signals, such as how long an account has existed, typical usage times of day, activity patterns over time, and how old the user claims to be.

Accounts detected as belonging to minors will have limited exposure to sensitive content, such as violence and sexual content. Users who are misidentified as being under 18 can restore full access by taking a selfie through “Persona,” a service that verifies identity.

“When we are not confident about someone’s age or have incomplete information, we default to a safer experience,” the company said in its blog post.
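
The “default to a safer experience” rule can be sketched in a few lines of Python. This is only an illustration of the decision logic described above, not OpenAI’s implementation: the `AgeEstimate` type, the `choose_experience` function, the experience labels, and the 0.1 threshold are all hypothetical, and the prediction model itself is treated as a black box.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgeEstimate:
    # Hypothetical model output: estimated probability that the account
    # holder is under 18, or None when there is too little information.
    prob_minor: Optional[float]


def choose_experience(stated_age: Optional[int],
                      estimate: AgeEstimate,
                      adult_threshold: float = 0.1,  # assumed value
                      verified_adult: bool = False) -> str:
    """Return "standard" or "teen_safeguards" for an account."""
    # A completed identity check (such as a selfie) restores full access.
    if verified_adult:
        return "standard"
    # Self-identified minors always get safeguards.
    if stated_age is not None and stated_age < 18:
        return "teen_safeguards"
    # Incomplete information: default to the safer experience.
    if estimate.prob_minor is None:
        return "teen_safeguards"
    # Only a confident "adult" prediction unlocks the standard experience.
    if estimate.prob_minor <= adult_threshold:
        return "standard"
    return "teen_safeguards"
```

Under these assumptions, an adult whose usage pattern looks ambiguous would land in the restricted experience until they verify, which is the trade-off the company’s statement describes.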

The company first announced this age prediction system in fall 2025 as part of a broader update to its policies regarding the safety of young users. The debut marks the latest in a string of initiatives aimed at minors:

In late September, the company announced Parental Controls for teen accounts on ChatGPT and Sora, which allow parents to link their accounts to their teens’ accounts and customize the types of content their teens can generate. These controls allow parents to set quiet hours, turn off memory, and opt out of model training.

And in early January, the company announced a partnership with advocacy organization Common Sense Media to support ballot initiatives that require companies to implement “age assurance technology” to protect young users.

OpenAI’s initiatives also follow months of turmoil and backlash over the risks that both its flagship chatbot and others pose to minors. OpenAI alone is facing multiple lawsuits alleging that ChatGPT is culpable for emotional manipulation and the deaths of multiple people by suicide. Google and Character.AI, meanwhile, settled a lawsuit earlier this month with similar allegations.

In an X post responding to Elon Musk regarding ChatGPT being linked to deaths, OpenAI CEO Sam Altman said that almost one billion people use ChatGPT, some of whom are in “very fragile mental states.”

“It is genuinely hard; we need to protect vulnerable users, while also making sure our guardrails still allow all of our users to benefit from our tools,” Altman said.

While tech like this is a step in the right direction (and serves as damage control), the bigger question is whether it actually works. Today's AI is still far from perfect, with a tendency to hallucinate and make mistakes. And we’ve already seen that wreak havoc in age verification systems such as Roblox’s, which misidentified kids as adults and vice versa. The question remains whether these kinds of risks can be controlled at all while allowing young users to harness the technology, and what responsibility these firms have in safeguarding the systems.

TOGETHER WITH MONGODB

How Thesys Is Building The Future of GenAI with MongoDB

Generative AI is, as the cool kids would say, “having a moment” – from whipping up stories and images on ChatGPT to “vibe coding” with Claude, everyone and their mother is getting in on the fun. The only hard part is building the platform… which is where Thesys comes in.

Thesys has created an API middleware that allows developers to ship AI-native, context-aware interfaces with ease. How’d they do it? By relying on the MongoDB Atlas database for its operational backbone, which offers the kind of flexibility, structure, and reliability Thesys needs to win – and they aren’t the only ones. In fact, MongoDB just announced the winners of its Jan 10th Agentic Orchestration and Collaboration hackathon at the Midway in San Francisco, where countless attendees built on its platform in an effort to win over $30,000 in cash prizes and the chance to win an NVIDIA GPU 5080. Not too shabby.

Want to see how your business can use MongoDB for Startups to build, scale, and succeed? Apply to join right here.

PRODUCTS

X breaks precedent by open-sourcing its algorithm

Since Elon Musk’s Twitter takeover, many people have claimed the algorithm change ruined the platform. Musk, now admitting the “algorithm is dumb and needs massive improvements,” has made the algorithm open-source.

In an X post announcing the plan a couple of weeks ago, Musk said the repository would be updated every four weeks and include comprehensive developer notes so people could easily identify what changed. With Monday’s launch, Musk said making the algorithm open-source would allow users to see the company struggle in real time as it attempts to improve it.

Specifically, the open-source GitHub repository contains the X algorithm that determines what shows up on your “For You” feed on the platform — the content you find organically on your homepage.

The model overview in the repository details how the algorithm works. A high-level look shows that the algorithm takes into account both in-network content (content from accounts you follow) and out-of-network content (discovered through ML-based retrieval), then ranks it using Phoenix, a Grok-based transformer model that predicts engagement probabilities for each post. However, if you dive deeper into the repository, you can learn exactly how it works, and people are taking to X to post the breakdowns of their findings.
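
To make the high-level flow concrete, here is a minimal Python sketch of that pipeline: gather in-network and out-of-network candidates, then rank them by predicted engagement. It does not reproduce the repository’s code. The `Post` type, the `WEIGHTS` table, the action names, and the idea of collapsing the probabilities into one weighted score are all assumptions made for illustration, and the probabilities are supplied by hand rather than predicted by Phoenix.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical weights for combining predicted engagement probabilities;
# neither the values nor the action names come from the repository.
WEIGHTS: Dict[str, float] = {"like": 1.0, "reply": 2.0, "repost": 1.5}


@dataclass
class Post:
    post_id: str
    in_network: bool  # True if the author is an account the user follows
    # Stand-in for model output: predicted probability per engagement type.
    engagement_probs: Dict[str, float] = field(default_factory=dict)


def score(post: Post) -> float:
    """Collapse per-action probabilities into one ranking score (assumed)."""
    return sum(WEIGHTS.get(action, 0.0) * prob
               for action, prob in post.engagement_probs.items())


def rank_feed(in_network: List[Post],
              out_of_network: List[Post],
              limit: int = 10) -> List[Post]:
    """Merge both candidate sources and return the top-scoring posts."""
    candidates = list(in_network) + list(out_of_network)
    return sorted(candidates, key=score, reverse=True)[:limit]


followed = [Post("a", True, {"like": 0.5, "reply": 0.1})]
discovered = [Post("b", False, {"like": 0.2, "repost": 0.4}),
              Post("c", False, {"like": 0.1})]
feed = rank_feed(followed, discovered)
```

In this toy example, the out-of-network post `b` outranks the followed account’s post `a` because its weighted score is higher, which illustrates how a feed built this way can surface content from accounts a user does not follow.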

The bigger impact, however, is that this is the first social media platform to openly post its proprietary algorithms, which helps with transparency, as users can learn exactly why information is being served up to them and even suggest fixes for common issues.

Open-sourcing the model also helps spur innovation, as developers trying to launch similar platforms can better understand how such a system works and potentially learn to build one on their own. Twitter is no stranger to copycats, with the X rebranding sparking competitors such as Bluesky and Threads.

Open-sourcing the model continues a trend we've seen recently: companies offering more transparency and user control over the AI algorithms powering their media consumption. For example, with the launch of Sora 2 and its accompanying social media app, OpenAI unveiled a new type of recommender algorithm trained on natural language. Similarly, Instagram unveiled a feature in which you can use AI to personalize your Reels feed by selecting what you’d like to see more of.

TOGETHER WITH GRANOLA

The AI Notepad That Finally Makes Meetings Useful

This app might actually make you love meetings.

Meetings aren’t the problem. The challenge is everything that comes after them.

With Granola, the AI Notepad for people with back-to-back meetings, you can avoid context switching, the cognitive load of remembering what you promised … and the stress of knowing something important slipped through.

Meetings are no longer about scrambling to keep up. With Granola, they are about connection. They lead somewhere.

Take notes the way you always have. Granola works in the background, turning conversations into clear summaries, action items, and next steps.

Before, during, or after a meeting, you can chat with your notes to quickly understand what needs to happen, write follow-ups, or share context with others.

Download Granola for free and use it in your next meeting using the code THEDEEPVIEW

BIG TECH

Microsoft makes a bid to run physical AI

As AI has skyrocketed in popularity in recent years, we have seen various evolutions of the tech, such as AI agents, become massively popular. The hottest new trend is Physical AI, and Microsoft is jumping into the fray.

Broadly speaking, Physical AI can be described as hardware that goes beyond what robots already do by perceiving the environment and then using reasoning to agentically perform or orchestrate actions. Microsoft’s first set of robotics models, Rho-alpha, translates spoken commands into actions for robotic systems performing bimanual manipulation tasks, meaning tasks that require using both hands at once.

The models, derived from Microsoft's Phi series, go a step beyond traditional vision-language-action models (VLAs) by adding tactile sensing, or the ability to understand physical cues. For instance, the company says efforts are underway to enable the models to sense modalities such as force. That capability could be helpful in real-world scenarios, such as stopping a movement if someone is in the way.

Rho-alpha also enables robots to learn from feedback given by people, which allows them to keep learning on the job, much like a person. Ultimately, Microsoft says the goal is to make physical systems more adaptable to both their environment and people’s requests, and therefore more trustworthy. To achieve this goal, Rho-alpha was trained on physical demonstrations, simulated tasks, and web-scale visual question-answering data, according to the company.

Lastly, Microsoft is tackling the scarcity of high-quality simulated data that accurately captures reality by having its training pipeline generate synthetic data using the open-source NVIDIA Isaac Sim framework. Those interested in using physical AI foundations and tools can join Microsoft's Research Early Access Program.

AI models have become exponentially more capable over the past 12-24 months, yet their usefulness has not kept pace because they've been limited to users’ computer screens. Physical AI is an attempt to bridge the physical world with advanced AI capabilities to unlock new use cases that could advance its usefulness by leaps and bounds. As a result, the work underway to improve both training and agentic capabilities is significant. NVIDIA took a similar approach at CES, unveiling new models and benchmarking solutions to drive physical AI forward, with CEO Jensen Huang even saying, “The ChatGPT moment for robotics is here.”

LINKS

DeepMind CEO Demis Hassabis says there are no plans for ads in Gemini

Davos: Microsoft CEO Satya Nadella says AI needs to work for society

OpenAI, ServiceNow sign three-year deal to bring models to ServiceNow platform

Human-centric frontier AI lab humans& emerges from stealth with $480 million

Legal AI startup Ivo raises $55 million at $355 million valuation

Applied Compute in talks to raise funding at $1.3 billion valuation

Verata: Helps private firms source proprietary deals by mapping their network and finding hot companies.

LTX Audio-to-Video: A new video generation model with consistent voices and control over performance.

Manus: The AI agent tool has now launched app publishing directly to the Google Play Store and Apple TestFlight

Crow: An agentic AI application that lets you control apps through chat.

MongoDB: Staff Developer Advocate, AI/ML

Faction: Founding Engineer (Applied AI)

Rebel Space Technologies: Machine Learning Engineer

Apple: Sr. AI Applications Engineer - Vision Products Software

POLL RESULTS

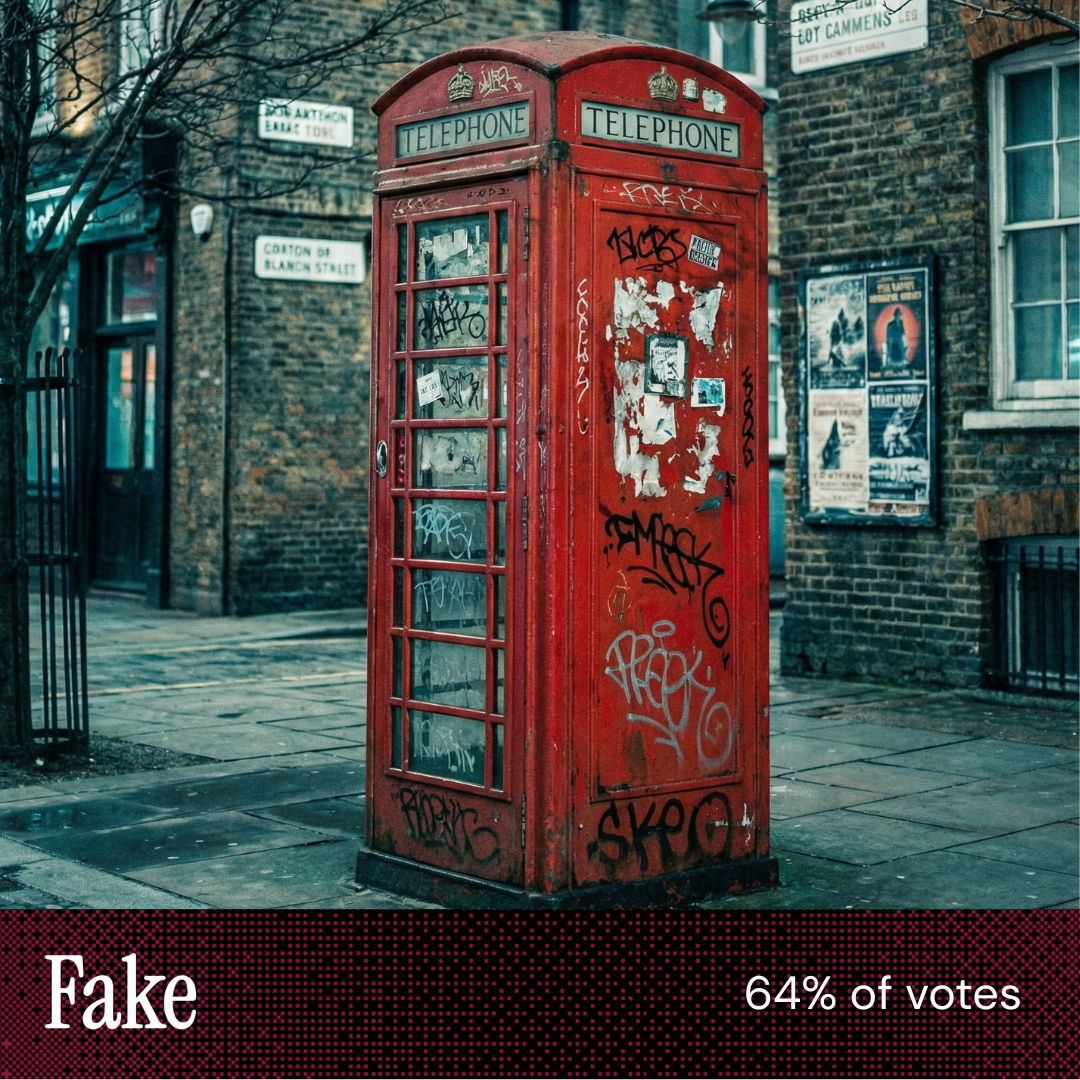

Will you stop using ChatGPT when it starts running ads?

Yes (46%)

No (34%)

Other (20%)

The Deep View is written by Nat Rubio-Licht, Sabrina Ortiz, Jason Hiner, Faris Kojok and The Deep View crew. Please reply with any feedback.

Thanks for reading today’s edition of The Deep View! We’ll see you in the next one.

“The text of [this image] was more legible and less nonsensical than the legible text of the [other] image. ('Corton of Blanon Street'?)”

“The street signs in [this image] are gibberish.”

Take The Deep View with you on the go! We’ve got exclusive, in-depth interviews for you on The Deep View: Conversations podcast every Tuesday morning.

If you want to get in front of an audience of 750,000+ developers, business leaders and tech enthusiasts, get in touch with us here.